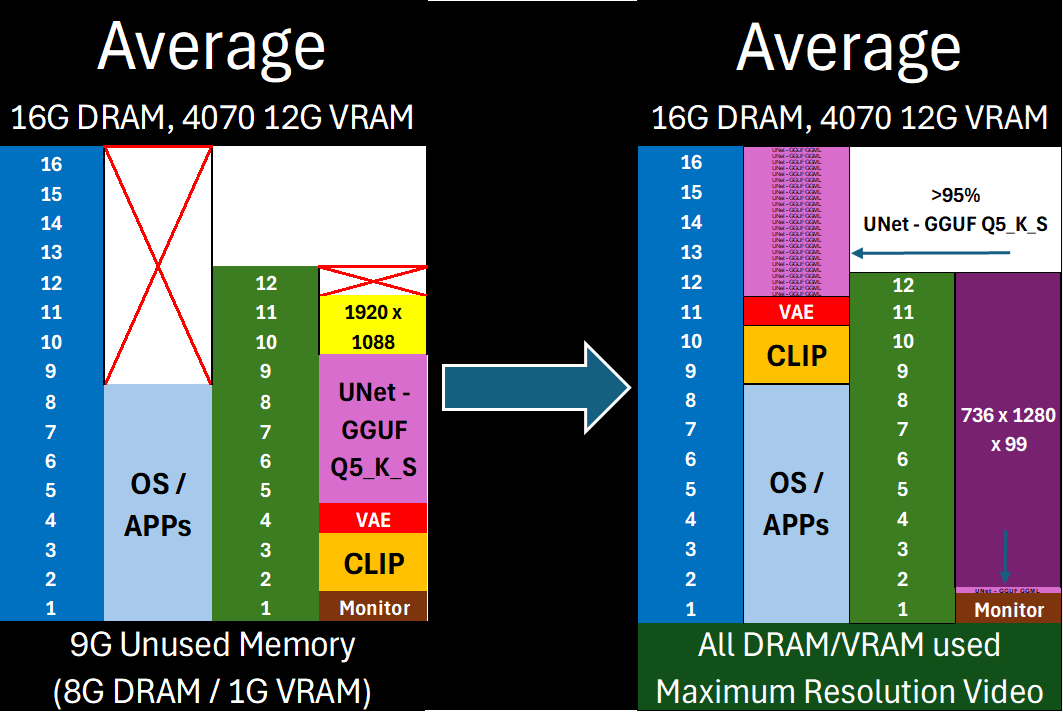

Free almost all of your GPU for what matters: Maximum latent space processing

- Universal .safetensors Support: Native DisTorch2 distribution for all .safetensors models.

- Up to 10% Faster GGUF Inference versus DisTorch1: The new DisTorch2 logic provides potential speedups for GGUF models versus the DisTorch V1 method.

- Bespoke WanVideoWrapper Integration: Tightly integrated, stable support for WanVideoWrapper with eight bespoke MultiGPU nodes.

What is DisTorch? Short for "distributed torch", the DisTorch nodes in this custom_node move the static parts of your main image generation model (the UNet) off your main compute card to a slower device, where they no longer take up space that could be better used for longer videos or more concurrent images. By selecting one or more donor devices (main CPU DRAM or another cuda/xpu device's VRAM), you choose how much of the model is loaded on that device instead of your main compute card. Just set how much VRAM you want to free up, and DisTorch handles the rest.
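To make the idea concrete, here is a minimal sketch of choosing which model blocks leave the compute card for a given Virtual VRAM target. It is not DisTorch's actual allocation logic: the function name `split_blocks`, the block names, and the largest-first greedy rule are all assumptions made for illustration.

```python
def split_blocks(block_sizes_gb, virtual_vram_gb):
    """Greedy illustration: offload blocks until the target amount is freed.

    block_sizes_gb:  hypothetical mapping of block name -> size in GB
    virtual_vram_gb: how much VRAM we want to free on the compute card
    Returns (placement, offloaded_gb).
    """
    placement = {}
    offloaded = 0.0
    # Visit the largest blocks first so few blocks need to move.
    ordered = sorted(block_sizes_gb.items(), key=lambda kv: kv[1], reverse=True)
    for name, size in ordered:
        if offloaded < virtual_vram_gb:
            placement[name] = "donor"      # e.g. system RAM
            offloaded += size
        else:
            placement[name] = "compute"    # stays on the main GPU
    return placement, offloaded


# Hypothetical block sizes, purely for demonstration.
blocks = {"block_a": 2.0, "block_b": 1.5, "block_c": 1.0, "block_d": 0.5}
placement, freed = split_blocks(blocks, virtual_vram_gb=3.0)
print(placement, freed)
```

Because whole blocks are moved, the amount actually freed can overshoot the requested target slightly.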

-

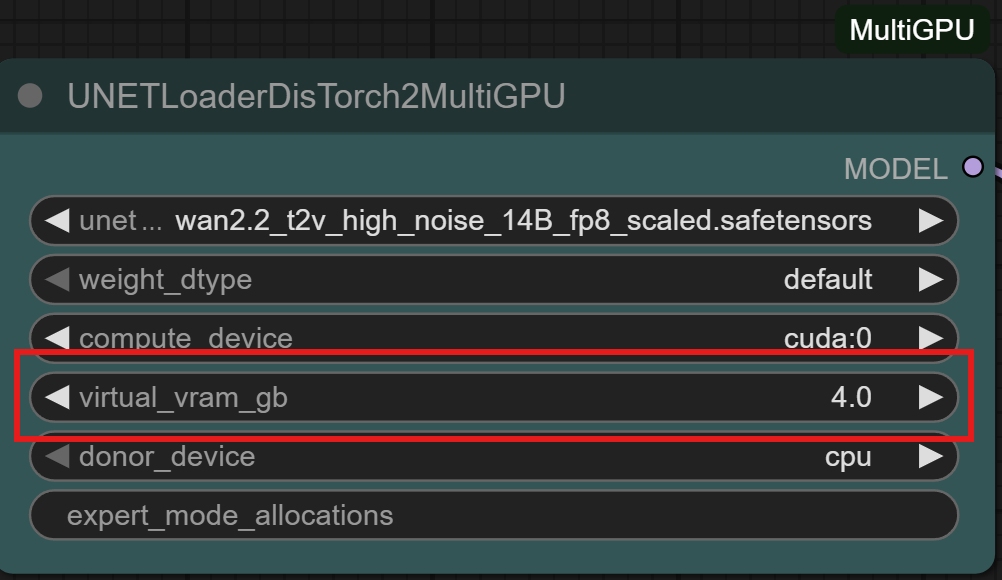

Virtual VRAM: Defaults to 4GB - just adjust it based on your needs

- Two Modes:

  - Donor Device: Offloads to the device of your choice; defaults to system RAM

  - Expert Mode Allocation: Assign parts of the UNet across ALL available devices for fine-grained control over exactly where your models are loaded. Choose each device and what percent of that device is allocated for ComfyUI model loading, and let ComfyUI-MultiGPU do the rest behind the scenes!

-

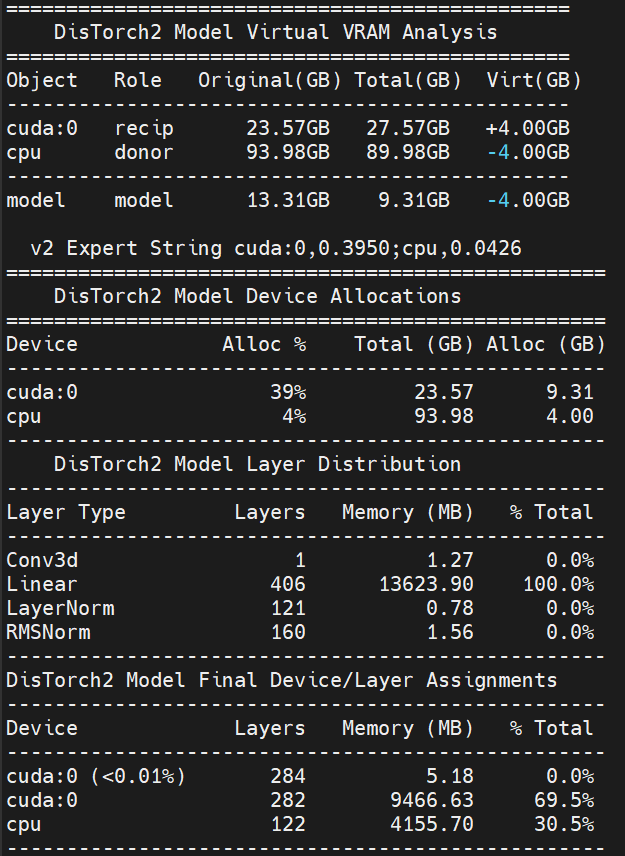

Hint: Every run using the standard

virtual_vram_gballocation scheme creates its own v2 Expert String listed in the log.- Example:v2 Expert String cuda:0,0.2126;cpu,0.0851 = 21.26% of cuda:0 memory and 8.51% of CPU memory are dedicated to a model in this case.

- Play around and see how the expert string moves for your devices. You'll be custom tuning in no time!
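To read an expert string like the one in the example, a small parser can help. The sketch below assumes only the `device,fraction;device,fraction` layout shown in the hint; the helper names `parse_expert_string` and `describe` are hypothetical and not part of ComfyUI-MultiGPU.

```python
def parse_expert_string(expert):
    """Parse 'device,fraction;device,fraction' into {device: fraction}."""
    allocations = {}
    for entry in expert.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing ';'
        # Split on the last comma so device names such as 'cuda:0' stay intact.
        device, fraction = entry.rsplit(",", 1)
        value = float(fraction)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"fraction out of range for {device!r}: {value}")
        allocations[device.strip()] = value
    return allocations


def describe(allocations):
    """Render each allocation as a percentage of that device's memory."""
    return [f"{frac:.2%} of {dev} memory" for dev, frac in allocations.items()]


allocs = parse_expert_string("cuda:0,0.2126;cpu,0.0851")
for line in describe(allocs):
    print(line)
```

Comparing the parsed values between runs makes it easier to see how the allocation shifts as you change virtual_vram_gb.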

- Free up GPU VRAM instantly without complex settings

- Run larger models by offloading layers to system RAM or another device

- Use all your main GPU's VRAM for actual compute/latent processing, or fill it up just enough to suit your needs and use the remainder for quick-access model blocks.

- Seamlessly distribute .safetensors and GGUF layers across multiple GPUs if available

- Shift easily from on-device speed to open-device latent space capability with a simple one-number change

DisTorch nodes give you one simple number to tune Virtual VRAM to your needs

Works with all .safetensors and GGUF-quantized models.

⚙️ Expert users: As with .gguf or exl2/3 LLM loaders, use expert_mode_allocation for exact allocations of model shards on as many devices as your setup has!

The new Virtual VRAM even lets you offload ALL of the model and still run compute on your CUDA device!

Installation via ComfyUI-Manager is preferred. Simply search for ComfyUI-MultiGPU in the list of nodes and follow installation instructions.

Alternatively, to install manually, clone this repository inside ComfyUI/custom_nodes/.

The extension automatically creates MultiGPU versions of loader nodes. Each MultiGPU node has the same functionality as its original counterpart but adds a device parameter that allows you to specify the GPU to use.
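As an illustration of the idea, the sketch below wraps a loader class so that its inputs gain a device choice. It follows the general ComfyUI convention of an `INPUT_TYPES` classmethod returning "required"/"optional" dictionaries, but it is not this extension's actual implementation: `add_device_param`, `ExampleVAELoader`, and the device list are assumptions made for the example.

```python
def add_device_param(loader_cls, devices):
    """Return a subclass of loader_cls whose inputs also offer a 'device' choice."""

    class MultiGPULoader(loader_cls):
        @classmethod
        def INPUT_TYPES(cls):
            # Copy the original input groups so the wrapped class is untouched.
            inputs = {k: dict(v) for k, v in loader_cls.INPUT_TYPES().items()}
            # Add a dropdown of devices, defaulting to the first one.
            inputs.setdefault("optional", {})["device"] = (
                list(devices),
                {"default": devices[0]},
            )
            return inputs

    MultiGPULoader.__name__ = loader_cls.__name__ + "MultiGPU"
    return MultiGPULoader


class ExampleVAELoader:
    """Hypothetical stand-in for an existing loader node."""

    @classmethod
    def INPUT_TYPES(cls):
        return {"required": {"vae_name": (["example.safetensors"],)}}


Wrapped = add_device_param(ExampleVAELoader, ["cuda:0", "cuda:1", "cpu"])
print(Wrapped.__name__, Wrapped.INPUT_TYPES()["optional"]["device"])
```

Subclassing rather than editing the original keeps the stock loader available alongside its MultiGPU counterpart.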

Currently supported nodes (automatically detected if available):

- Standard ComfyUI model loaders:
  - CheckpointLoaderSimpleMultiGPU/CheckpointLoaderSimpleDistorch2MultiGPU
  - CLIPLoaderMultiGPU
  - ControlNetLoaderMultiGPU
  - DualCLIPLoaderMultiGPU
  - TripleCLIPLoaderMultiGPU
  - UNETLoaderMultiGPU/UNETLoaderDisTorch2MultiGPU
  - VAELoaderMultiGPU

- WanVideoWrapper (requires ComfyUI-WanVideoWrapper):
  - WanVideoModelLoaderMultiGPU & WanVideoModelLoaderMultiGPU_2
  - WanVideoVAELoaderMultiGPU
  - LoadWanVideoT5TextEncoderMultiGPU
  - LoadWanVideoClipTextEncoderMultiGPU
  - WanVideoTextEncodeMultiGPU
  - WanVideoBlockSwapMultiGPU
  - WanVideoSamplerMultiGPU

- GGUF loaders (requires ComfyUI-GGUF):
  - UnetLoaderGGUFMultiGPU/UnetLoaderGGUFDisTorch2MultiGPU
  - UnetLoaderGGUFAdvancedMultiGPU
  - CLIPLoaderGGUFMultiGPU
  - DualCLIPLoaderGGUFMultiGPU
  - TripleCLIPLoaderGGUFMultiGPU

- XLabAI FLUX ControlNet (requires x-flux-comfy):
  - LoadFluxControlNetMultiGPU

- Florence2 (requires ComfyUI-Florence2):
  - Florence2ModelLoaderMultiGPU
  - DownloadAndLoadFlorence2ModelMultiGPU

- LTX Video Custom Checkpoint Loader (requires ComfyUI-LTXVideo):
  - LTXVLoaderMultiGPU

- NF4 Checkpoint Format Loader (requires ComfyUI_bitsandbytes_NF4):
  - CheckpointLoaderNF4MultiGPU

- HunyuanVideoWrapper (requires ComfyUI-HunyuanVideoWrapper):
  - HyVideoModelLoaderMultiGPU
  - HyVideoVAELoaderMultiGPU
  - DownloadAndLoadHyVideoTextEncoderMultiGPU

All MultiGPU nodes available for your install can be found in the "multigpu" category in the node menu.

All workflows have been tested on a 2x 3090 + 1060ti Linux setup, a 4070 Windows 11 setup, and a 3090/1070ti Linux setup.

- Default DisTorch2 Workflow

- FLUX.1-dev Example

- Hunyuan GGUF Example

- LTX Video Text-to-Video

- Qwen Image Basic Example

- WanVideo 2.2 Example

- FLUX.1-dev 2-GPU GGUF

- Hunyuan 2-GPU GGUF

- Hunyuan CPU+GPU GGUF

- Hunyuan GGUF DisTorch

- Hunyuan GGUF MultiGPU

If you encounter problems, please open an issue. Attach the workflow if possible.

Currently maintained by pollockjj. Originally created by Alexander Dzhoganov. With deepest thanks to City96.